How We Rank AI Tools

We don't just list tools—we stress-test them.

Every product on WeeklyAITools must pass our rigorous 3-Layer Vetting Process, designed to separate reliable, enterprise-ready tools from shallow wrappers, abandoned projects, or risky vendors.

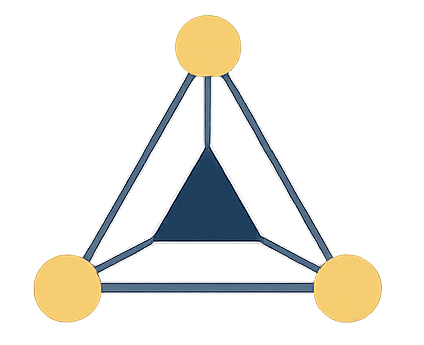

The 3-Layer Vetting Process

Every tool must pass all three layers before it appears in our directory.

The Alive Test

We confirm the tool is real, active, trustworthy, and not a low-effort clone.

A tool must demonstrate:

- Active development and recent updates

- Transparent pricing and clear usage terms

- Verifiable company presence (team, site, socials, contact)

- Clear product differentiation (not a thin wrapper on GPT/Claude)

- Sufficient brand presence across reputable domains

If a tool fails the Alive Test, it is never listed.
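
As an illustration, the all-or-nothing rule above can be sketched in Python. The `AliveCheck` class and its field names are hypothetical, chosen only to mirror the five requirements listed; this is a minimal sketch, not a description of any internal tooling.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AliveCheck:
    """One boolean per Alive Test requirement (field names are illustrative)."""
    active_development: bool
    transparent_pricing: bool
    verifiable_company: bool
    clear_differentiation: bool
    brand_presence: bool

    def failed(self) -> list[str]:
        """Return the names of the requirements that were not met."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def passes(self) -> bool:
        """A tool passes only when every requirement is met."""
        return not self.failed()


# Hypothetical example: one unmet requirement is enough to block a listing.
example = AliveCheck(
    active_development=True,
    transparent_pricing=True,
    verifiable_company=True,
    clear_differentiation=False,  # e.g. a thin wrapper on an existing model
    brand_presence=True,
)
```

Keeping each requirement as a separate field makes it possible to report which requirement blocked a listing, rather than only a pass or fail result.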

The Feature Audit

We evaluate the tool's business readiness against five essential operational criteria.

API Access

Can the product be integrated? Is the infrastructure real, stable, and documented?

Data Privacy

Does the tool explain how data is stored, processed, and secured?

Support Response

Does the company actually reply? How quickly? Is there a real support channel?

Output Speed

Does the tool perform reliably under load, not just in the vendor's marketing demo?

Community Activity

Is there real user adoption, ongoing discussion, and active engagement?

Behind the scenes, we also factor in company maturity, product depth, technical documentation, integration ecosystem, and update cadence, though we do not publish our internal scoring logic.

The "Real World" Stress Test

We don't trust marketing pages.

We run practical, repeatable tests to confirm the tool works as claimed.

Example tests we run:

- Generate a structured 2,000-word article with specific formatting

- Process 100+ customer support tickets in a batch

- Analyze a long-form PDF and extract actionable insights

- Maintain stable performance during 24-hour continuous operation

- Validate accuracy, consistency, and failure handling

Only tools that perform consistently across multiple trials make it through this layer.
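
The idea of "consistent across multiple trials" can be sketched as a small harness that runs the same task several times and passes only if every trial succeeds. Everything named here (`run_trials`, `TrialResult`, `is_consistent`, the default of five trials, and the stand-in task) is an assumption made for illustration; the actual number of trials and pass thresholds are not stated on this page.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class TrialResult:
    ok: bool          # True if the task ran without raising and passed its check
    seconds: float    # wall-clock time for the trial, including the check
    error: str = ""   # exception text when the trial raised


def run_trials(task: Callable[[], object],
               check: Callable[[object], bool],
               trials: int = 5) -> list[TrialResult]:
    """Run `task` repeatedly and validate each output with `check`."""
    results = []
    for _ in range(trials):
        start = time.perf_counter()
        try:
            output = task()
            results.append(TrialResult(check(output), time.perf_counter() - start))
        except Exception as exc:  # a crash counts as a failed trial
            results.append(TrialResult(False, time.perf_counter() - start, str(exc)))
    return results


def is_consistent(results: list[TrialResult]) -> bool:
    """Pass only if there is at least one trial and every trial succeeded."""
    return bool(results) and all(r.ok for r in results)


# Stand-in task: a real test would call the tool under evaluation here.
def fake_article() -> str:
    return "word " * 2000


results = run_trials(fake_article, lambda text: len(text.split()) >= 2000)
```

Recording the duration and error text for each trial, instead of a single pass or fail flag, keeps enough detail to compare runs and to see how a tool fails when it does.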

Our Trust Principles

Independent Testing

We test every tool ourselves—never relying solely on vendor claims.

Real-World Use Cases

We evaluate tools the way real businesses use them, not through superficial demos.

Transparent Scoring

Our scoring model is structured, consistent, and applied uniformly. We keep the exact weights and formulas private so that vendors cannot game them.

Quarterly Re-Evaluation

AI tools evolve fast. Every tool is rescored each quarter to reflect new features, pricing, and performance.
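
A quarterly schedule like this can be tracked with a simple due-date check. The sketch below assumes "quarterly" means three calendar months after the previous scoring date, which this page does not define precisely; `add_months` and `is_due` are hypothetical helper names.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of the target month."""
    index = d.year * 12 + (d.month - 1) + months
    year, month = divmod(index, 12)
    month += 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def is_due(last_scored: date, today: date, interval_months: int = 3) -> bool:
    """True once `interval_months` have elapsed since the last scoring."""
    return today >= add_months(last_scored, interval_months)
```

Clamping to the last day of the month avoids invalid dates when a tool was last scored on the 29th, 30th, or 31st.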

The Mark of Quality

A tool earns our Verified badge only if it passes all three evaluation layers and demonstrates reliability during our hands-on testing period.

Requirements include:

- High performance across all evaluation criteria

- Clear and transparent pricing

- Sufficient customer support availability

- Demonstrated product depth and stability

- Strong real-world performance during our 30-day testing window

Only a small percentage of tools meet this standard.

Affiliate Disclosure

Weekly AI Tools may earn commissions when you purchase tools through our affiliate links. These partnerships help us maintain our free directory and conduct independent testing. However, affiliate relationships do not influence our editorial process, scoring, or recommendations. Tools are evaluated using the same criteria regardless of affiliate status.